Hola, I’m Adam, and I spent a week as a work experience student at Embecosm, attempting to build a face-tracking webcam. Before coming to Embecosm I had no experience of electronics, and my software experience was with Python and scripting languages like AutoIt.

Starting

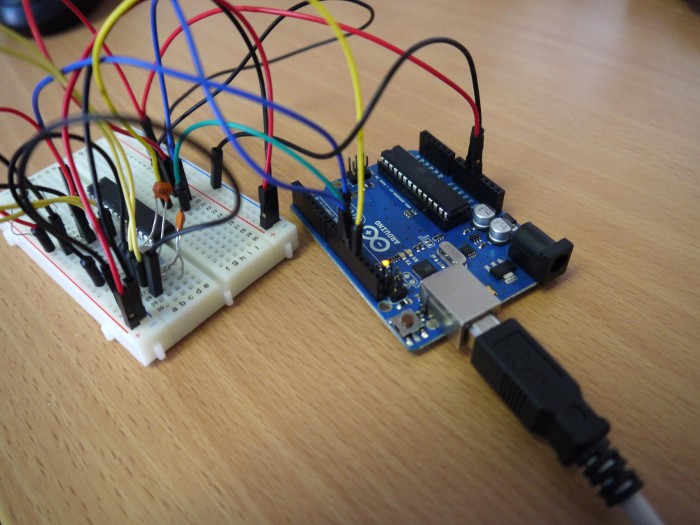

I started by building a simple circuit on an Arduino, with a small program that would repeatedly switch a set of LEDs on and off; somehow it worked. I had never used the Wire library before, so this must have been a good start. If it were not for Simon Cook from Embecosm and his knowledge of electronics, I think I would still be deciding whether the longer pin on an LED was positive or negative.

Shrimping

On my first day, we ordered the components for a Shrimping.it circuit. Some of you are probably wondering what Shrimping is. Shrimping is pretty much a breadboard version of the Arduino Uno, which can be made at one tenth of the price of the official Arduino. Loads of information can be found at the official Shrimping website, www.shrimping.it. The components we needed for a Shrimping.it board were:

- 16MHz quartz crystal

- ATMega328-PU microcontroller

- 10μF 50V electrolytic capacitor

- 6mm tactile switch

- 22pF 50V ceramic capacitor (2 off)

- 100nF 50V ceramic capacitor (4 off)

- 10K 0.125W resistor

- Breadboard

We would also have needed breadboarding wires for connecting and LEDs for testing, but we already had plenty of both. To allow for problems, we ordered enough components to make two Shrimping.it boards.

When the components arrived on my second day, I began building a Shrimping circuit. After many, many hours of wiring, flashing and disassembling the Shrimping board, Simon and I finally got the Shrimp accepting uploaded code.

Problems we encountered

It wasn’t all plain sailing. The first problem was that we had ordered plain ATMega328 chips, without a bootloader pre-installed. So we had to use the standard Arduino as a programmer to flash the new microcontrollers.

We then found that the Shrimping.it board would just not run any code. Even if we programmed the ATMega328 on the Arduino, then unplugged the chip and put it on the Shrimping board, nothing would happen. The problem was the tactile switch, which has 4 terminals, two on each side. We had fitted it rotated by 90°, so instead of acting as a switch, it was acting as a wire, and the microcontroller was permanently in reset. Once we fixed this, we were soon able to run programs.

However, we still could not program the board through the USB to UART adapter. Eventually we found it was a problem of labeling. We thought we had connected the transmit and receive pins of the UART (TXD and RXD) correctly. However, the meaning of transmit and receive depends on which end you are looking from: the adapter's TXD has to go to the microcontroller's RXD, and vice versa. Swapping over the wires gave us a fully working Shrimping.it board.

It took us about a day to get to this stage, using only a multimeter and LEDs for testing (several of which we blew up by connecting across what turned out to be the power rails).

Building the face tracker

This required some more parts:

- two standard servo motors

- a webcam

We got the webcam from Amazon and the servos from eBay. This was the only major issue we encountered all week: the promised two-day delivery for the servos did not happen, and at the time of writing they still have not arrived.

We used the face tracker from Instructables. This uses the OpenCV open source computer vision library to do the face detection and then sends position information to an Arduino over its serial port. We first used the standard OpenCV example to check that the face tracker worked. It did, although with a worrying tendency to recognize the office wall clock and expensive equipment, and ignore people wearing glasses!

Connecting to the Arduino

The Instructables example modifies the standard face tracker example to drive the serial interface. It uses the Serial C++ library for Win32 written by Thierry Schneider, which is highly specific to Microsoft Windows. However, the interface it offers is very simple, particularly since the only methods actually used are connect(), disconnect() and sendChar(). For Linux all we need is to use the relevant device (/dev/ttyACMn or /dev/ttyUSBn) with standard open(), close() and write() calls and a call to system() to use stty to set the line parameters. For example:

    // Returns 0 on success, -1 if the device could not be opened.
    int connect (const char *portname,
                 int rate,
                 serial_parity parity)
    {
      string portstr = portname;
      string ratestr =
        static_cast<ostringstream*>(&(ostringstream() << rate))->str();
      // Put the line into raw 8-bit mode at the requested rate.
      string comm = "stty -F " + portstr + " cs8 " + ratestr
        + " ignbrk -brkint -icrnl -imaxbel -opost -onlcr -isig"
        + " -icanon -iexten -echo -echoe -echok -echoctl -echoke"
        + " noflsh -ixon -crtscts";
      cout << comm << endl;
      if (-1 == system (comm.c_str()))
        {
          cerr << "ERROR: stty failed: " << strerror (errno) << endl;
        }
      handle = open (portname, O_RDWR);
      if (-1 == handle)
        {
          cerr << "ERROR: open failed: " << strerror (errno) << endl;
          return -1;
        }
      return 0;
    }

This is the most complex of the three functions.
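For comparison, the other two functions need little more than write() and close(). The following is a minimal sketch rather than the code from the repository: the SerialPort class name and the attach() helper are invented here for illustration, the return values are an assumption, and only sendChar() and disconnect() correspond to names mentioned above.

```cpp
#include <cerrno>
#include <cstring>
#include <iostream>
#include <unistd.h>   // write(), close()

// Hypothetical stand-in for the serial wrapper class; the real class and
// its members may be named and laid out differently.
class SerialPort
{
public:
  SerialPort () : handle (-1) {}

  // Illustration only: adopt an already opened file descriptor, in place
  // of the connect() function shown in the text.
  void attach (int fd) { handle = fd; }

  // Send a single byte. Returns 0 on success, -1 on failure (assumed
  // convention).
  int sendChar (char c)
  {
    if (1 != write (handle, &c, 1))
      {
        std::cerr << "ERROR: write failed: " << strerror (errno) << std::endl;
        return -1;
      }
    return 0;
  }

  // Close the device if it is open, and mark the handle as unused.
  void disconnect ()
  {
    if (-1 != handle)
      {
        close (handle);
        handle = -1;
      }
  }

private:
  int handle;   // file descriptor of the serial device, or -1 if closed
};
```

Because a serial device is just a file descriptor on Linux, the same class can be exercised against a pipe or an ordinary file when no board is plugged in.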

We also modified the face tracker code to use this new header and, since the device had been hard-coded, changed it to take the device as a command-line argument:

    if (argc != 2)
      {
        cerr << "Usage: techbitarFaceDetection " << endl;
        exit (1);
      }

and

    if (arduino_com != 0)
      {
        // Standard Arduino uses /dev/ttyACM0 typically, Shrimping.it
        // seems to use /dev/ttyUSB0 or /dev/ttyUSB1.
        arduino_com->connect(argv[1], 57600, spNONE);
      }

The one final change we needed was to bring this code back into line with the standalone face detect example. For some reason it uses 3 as the number of the camera to use when calling cvCaptureFromCAM(). We changed it back to use -1 as in the original code.

All the code can be downloaded from Embecosm’s GitHub repository.

Getting it to work

We were unable to complete the final stage of the project since the servos had not arrived. However, we modified the code to drive 4 LEDs instead, to give an indication of whether the code was driving the X or Y servos.

We could not log results back to a console since the face tracker was using the serial connection. So to see what the values were, we used the 4 LEDs to display each value one nibble (4 bits) at a time. With this we could get a feel for the X and Y values being passed by the face tracker.
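The nibble-at-a-time idea can be sketched in ordinary C++ as below. This is an illustration, not the sketch that ran on the board: nibbleToLeds() and the Nibble type are hypothetical names, and the bit order (element 0 is the least significant bit) is an assumption. On the microcontroller each element would be passed to a pin write instead of being returned in an array.

```cpp
#include <array>
#include <cstdint>

// Which half of the byte to show on the four LEDs.
enum class Nibble { High, Low };

// Return the on/off state of four LEDs for one nibble of `value`.
// Element 0 corresponds to the least significant bit of that nibble.
std::array<bool, 4> nibbleToLeds (std::uint8_t value, Nibble which)
{
  const std::uint8_t bits = static_cast<std::uint8_t> (
    (which == Nibble::High) ? (value >> 4) : (value & 0x0F));

  std::array<bool, 4> leds{};
  for (int i = 0; i < 4; ++i)
    {
      leds[i] = ((bits >> i) & 1) != 0;
    }
  return leds;
}
```

For a received value of 0xA5, the high nibble is 0xA (binary 1010) and the low nibble is 0x5 (binary 0101), so the two displays light alternating LEDs in opposite patterns, which makes it easy to check the wiring by eye.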

Conclusions

I’ve found the work interesting and amusing – unlike anything I’ve done before. It has helped to confirm my desire to follow computing as a career path. Next year I move to a sixth form college where I’ll be studying web and games development and mathematics for ‘A’ level.

Adam is a student from a local school, who has just completed his GCSE examinations. He spent a week in early July 2013 as a work experience student with Embecosm.