Introduction

My name is Pietra Ferreira Tadeu Madio and I’m an AI Research Engineer working at Embecosm. During the summer I was able to start working full-time on my own AI-driven project.

Artificial intelligence is typically associated with high-performance computing and big data, but there is a growing need to develop AI systems for embedded applications. Embecosm has always been highly regarded for its specialisation in embedded devices, so we believe that applying this expertise to the growing field of AI will benefit both communities.

The Project

Overview

For the project, we decided to implement facial recognition on Google’s Edge TPU, with the goal of exploring a combination of AI and embedded systems.

Google’s Edge TPU

I recently found out that Google had released a development board whose main purpose is prototyping on-device machine learning models. The board is capable of high-speed machine learning inference: its Edge TPU coprocessor can perform 4 trillion operations per second (4 TOPS) at low power, using 0.5 watts for each TOPS, which gives 2 TOPS per watt.
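The efficiency figures above follow from simple arithmetic, sketched below. The function name edge_tpu_power is only an illustration, and the total power figure is derived from the two quoted numbers rather than measured on the board.

```shell
# Hypothetical helper: derive peak power and efficiency from the quoted figures.
# $1 = peak throughput in TOPS, $2 = watts consumed per TOPS.
edge_tpu_power() {
  awk -v tops="$1" -v watts_per_tops="$2" 'BEGIN {
    printf "total power: %.1f W, efficiency: %.1f TOPS/W\n",
           tops * watts_per_tops, 1 / watts_per_tops
  }'
}

# 4 TOPS at 0.5 W per TOPS, as quoted for the Edge TPU coprocessor.
edge_tpu_power 4 0.5
```

With the quoted numbers this works out to about 2 W at peak throughput, which is consistent with the 2 TOPS per watt figure.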

My supervisor, Lewis Revill, and I were instantly excited and, luckily enough, Embecosm agreed to provide us with the board. We then needed to decide what to do with it.

More information about the board can be found here: Dev Board | Coral.

Facial Recognition

The hardware of the Edge TPU was designed to accelerate deep feed-forward neural networks such as convolutional neural networks (CNNs), as opposed to recurrent neural networks (RNNs) or Long Short-Term Memory models (LSTMs). Therefore, computer vision seemed like an ideal topic to explore.

Originally, we pondered the idea of working with sound. However, for the reasons mentioned above, models that process sound are usually beyond the Edge TPU’s capabilities, as they normally rely on time-based architectures such as RNNs or LSTMs.

The reason we chose facial recognition was that after going through the Edge TPU’s documentation, we noticed that while Google had provided demos for classification and object detection models, there was a lack of facial recognition demos. Face recognition is closely related to image classification and object detection, so it seemed like a reasonable next step.

Setting up the board

For the remainder of this blog post, I’ll recount the process we went through to start using the Coral board. To set up the board, we followed the tutorial on the Google Coral website; the steps we took are described below. The full instructions can be found here: Get Started | Coral.

Requirements

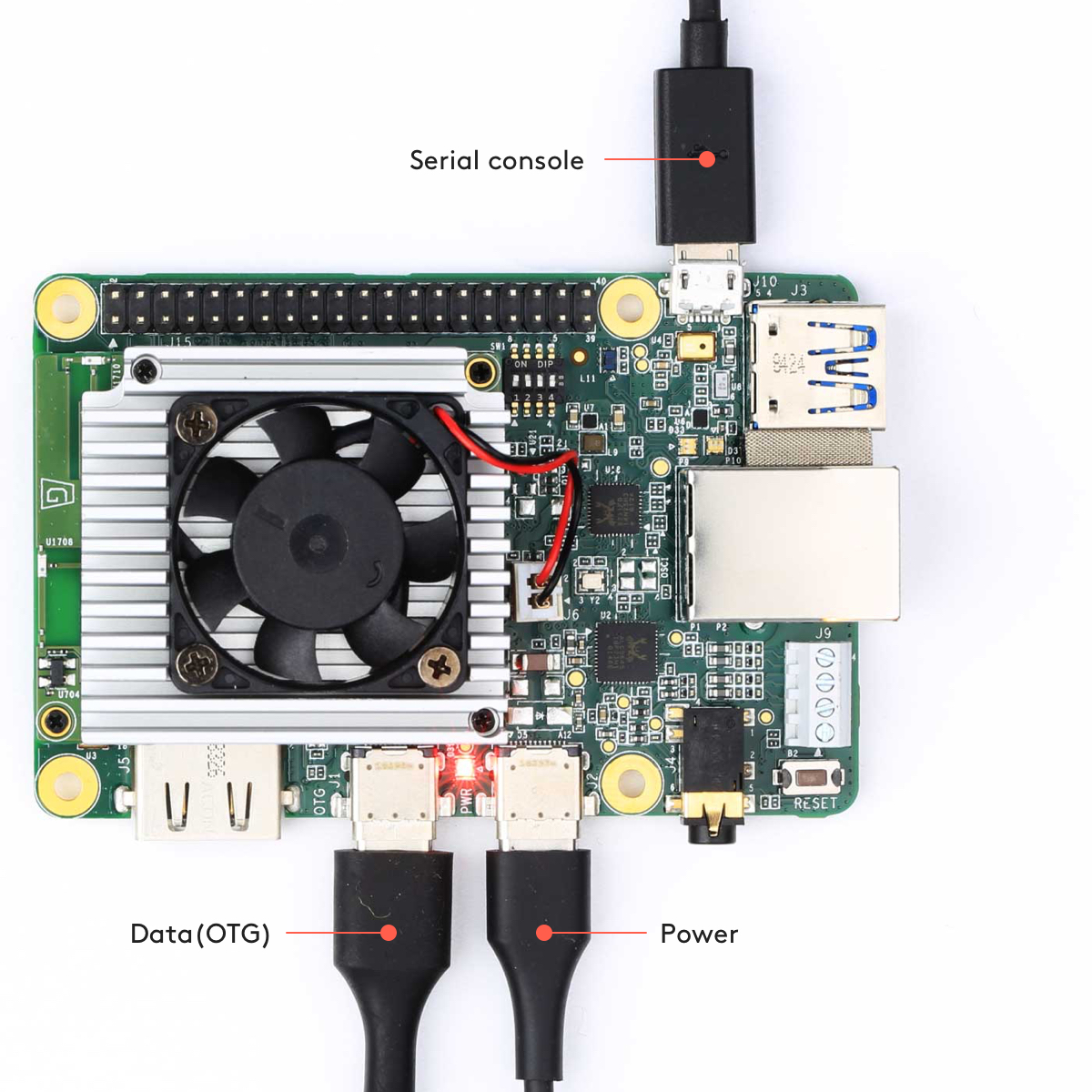

The board can be used from both Linux and Mac machines. All the commands below were run on a Mac, so some of them would need to be modified to run on Linux. We used a USB-A to USB-microB cable, a USB-C to USB-C cable and a 2-3A (5V) USB Type-C power supply. Additionally, we needed a serial console program such as screen (which, luckily enough, is available on Mac by default) and the latest fastboot tool.

Starting the board

To be able to flash the board, we had to install the udev driver, which is required to communicate with the Dev Board over the serial console. We then connected the computer to the board’s serial console port with the USB-microB cable. The LEDs started to flash, leading us to the next step, which was to run the command screen /dev/cu.SLAB_USBtoUART 115200 (on Mac).
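The serial console step can be wrapped in a small check, sketched below, so that a missing cable or driver produces a clear message instead of a screen error. The function name open_serial_console and its defaults are assumptions for illustration; the device path and baud rate are the ones used above on a Mac, and the device name will differ on Linux.

```shell
# Hypothetical wrapper around the screen command from the text.
# $1 = serial device (defaults to the macOS name used above), $2 = baud rate.
open_serial_console() {
  device="${1:-/dev/cu.SLAB_USBtoUART}"
  baud="${2:-115200}"
  # Fail early if the serial device has not appeared.
  if [ ! -e "$device" ]; then
    echo "serial device $device not found; check the USB-microB cable and driver" >&2
    return 1
  fi
  screen "$device" "$baud"
}
```

Running open_serial_console with no arguments is then equivalent to the command shown above.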

The last step was to power on the board. We plugged the 2-3A power cable into the USB-C port labeled “PWR” and then used the USB-C cable to connect the computer to the USB-C data port labeled “OTG”.

Booting

Now that the board was connected, we had to download and flash the system image. To do that we downloaded the image by running curl -O https://dl.google.com/coral/mendel/enterprise/mendel-enterprise-chef-13.zip, unzipped the file, and ran bash flash.sh from within the mendel-enterprise-chef-13 folder.
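The download and flash steps above can be collected into one script, sketched below. The variable and function names are assumptions for illustration; the URL, the folder name and the flash.sh script are the ones mentioned in the text.

```shell
# URL of the Mendel system image, as given in the text.
IMAGE_URL="https://dl.google.com/coral/mendel/enterprise/mendel-enterprise-chef-13.zip"
IMAGE_ARCHIVE="${IMAGE_URL##*/}"        # file name part of the URL
IMAGE_FOLDER="${IMAGE_ARCHIVE%.zip}"    # folder the text says flash.sh lives in

# Hypothetical helper: download, unpack, and flash, stopping at the first failure.
flash_board() {
  curl -O "$IMAGE_URL" &&
    unzip "$IMAGE_ARCHIVE" &&
    (cd "$IMAGE_FOLDER" && bash flash.sh)
}
```

Chaining the commands with && means flash.sh only runs if the download and unzip both succeeded, and the subshell keeps the working directory unchanged afterwards.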

When the script finished, the system rebooted and the console prompted us to log in. The default username and password are both the word mendel.

Connecting to the Internet

The board supports wireless connectivity, so it wasn’t too hard to connect it to the internet. We simply ran the command nmcli dev wifi connect <NETWORK_NAME> password <PASSWORD> ifname wlan0 and then verified it worked by running nmcli connection show.
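A minimal sketch of the Wi-Fi step, meant to be run on the board, is shown below. The function name connect_wifi is hypothetical; the nmcli commands are the ones from the text. Quoting the arguments matters when the network name or password contains spaces.

```shell
# Hypothetical helper: $1 = network name, $2 = password.
connect_wifi() {
  if [ "$#" -ne 2 ]; then
    echo "usage: connect_wifi <NETWORK_NAME> <PASSWORD>" >&2
    return 2
  fi
  # Connect on the wlan0 interface, then list connections to verify.
  nmcli dev wifi connect "$1" password "$2" ifname wlan0 &&
    nmcli connection show
}
```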

Mendel Software

To ensure that we had the latest software available, we updated the packages with the following commands: echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list, then sudo apt-get update, followed by sudo apt-get dist-upgrade.
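The update sequence can be written as a short script for the board, sketched below with plain ASCII double quotes, which the shell requires around the repository line. The variable and function names are assumptions for illustration; the repository line and file path are taken from the text.

```shell
# Package source line and destination file, as given in the text.
CORAL_APT_LINE="deb https://packages.cloud.google.com/apt coral-edgetpu-stable main"
CORAL_APT_FILE="/etc/apt/sources.list.d/coral-edgetpu.list"

# Hypothetical helper: register the package source, then upgrade the system.
update_mendel() {
  echo "$CORAL_APT_LINE" | sudo tee "$CORAL_APT_FILE" &&
    sudo apt-get update &&
    sudo apt-get dist-upgrade
}
```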

To be able to connect to the board through SSH, we installed the Mendel Development Tool (MDT) as well. We ran pip3 install --user mendel-development-tool on the host computer, after which we could disconnect the USB-microB cable from the serial console and open a shell over the USB-C cable using mdt shell. MDT generated an SSH public/private key pair and pushed the public key to the board’s authorized_keys file, which then allowed us to authenticate with SSH.
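One thing that can go wrong at this step is that the shell does not find the mdt command after a --user install. The sketch below, for the host computer, assumes the cause is that the per-user script directory is not on PATH. The function names are hypothetical; python3 -m site --user-base is the standard way to ask Python where --user installs go.

```shell
# Directory where "pip3 install --user" places command-line scripts.
user_bin_dir() {
  echo "$(python3 -m site --user-base)/bin"
}

# Hypothetical helper: install MDT and make it reachable in this shell session.
install_mdt() {
  pip3 install --user mendel-development-tool &&
    PATH="$(user_bin_dir):$PATH" &&
    export PATH
}
```

After install_mdt has run, mdt shell can be used in the same terminal session as described above.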

Running the Demo Application

Finally, we tested the setup by running a demo model. We connected a monitor to the board through the HDMI port and executed the demo with the command edgetpu_demo --device. The result was a recording of cars displayed on the monitor, with a MobileNet model executing in real time to detect each car. The following page contains more detailed demos and more information about supported models: Demos | Coral.

The next blog post in this series will be a more detailed overview of the subject of facial recognition and how it will be applied to the board.